This function transforms a data.frame of dated objects with associated data to a new data.frame which contains a row for each dating 'step' for each objects. Dating 'steps' can be single years (with `stepsize = 1`) or an arbitrary number that will be used as a guideline for the interval. It's expected that dates BCE are displayed as negative values while dates CE are positive values. Ignoring this will cause problems. If dates are provided in the wrong order in any number of rows they will automatically be switched.

The function along with a guide on how to use it and a case study is published in [Steinmann -- Weissova 2021](https://doi.org/10.1017/aap.2021.8).

Arguments

- DAT_df

a data.frame with 4 variables: * `ID` : An identifier for each row, e.g. an Inventory number (ideally character). * `group` : A grouping variable, such as type or context (ideally factor). * `DAT_min` : minimum dating (int/num), the minimum dating boundary for a single object, i.e. the earliest year the object may be dated to. * `DAT_min` : maximum dating (int/num), the maximum dating boundary for a single object, i.e. the latest year the object may be dated to. The columns _must_ be in this order, column names are irrelevant; each row _must_ correspond to one datable entity / object.

- stepsize

numeric, default is 1. Number of years that should be used as an interval for creating dating steps.

- calc

method of calculation to use; can be either one of "weight" (default) or "probability": * "weight": use the [published original calculation](https://doi.org/10.1017/aap.2021.8) for weights, * "probability": calculate year-wise probability instead (only reasonable when `stepsize = 1`)

- cumulative

FALSE (default), TRUE: add a column containing the cumulative probability for each object (only reasonable when `stepsize = 1`, and will automatically use probability calculation)

- verbose

TRUE / FALSE: Should the function issue additional messages pointing to possible inconsistencies and notify of methods?

Value

an expanded data.frame in with each row represents a dating 'step'. Added columns contain the value of each step, the 'weight' or 'probability'- value for each step, and (if chosen) the cumulative probability.

Examples

data("Inscr_Bithynia")

DAT_df <- Inscr_Bithynia[, c("ID", "Location", "DAT_min", "DAT_max")]

DAT_df_steps <- datsteps(DAT_df, stepsize = 25)

#> Using 'weight'-calculation (see https://doi.org/10.1017/aap.2021.8).

#> Warning: 1554 rows with NA-values in the dating columns will be omitted.

#> DAT_min and DAT_max at Index: 57, 68, 120, 173, 187, 238, 299, 300, 311, 312, 588, 590, 599, 679, 794, 798, 799, 828, 831, 833, 834, 837, 841, 878, 879, 908, 909, 914, 915, 931, 932, 933, 937, 938, 941, 942, 997, 1051, 1064, 1067, 1130, 1307, 1308, 1310, 1322, 1323, 1324 have the same value! Is this correct? If unsure, check your data for possible errors.

#> Warning: stepsize is larger than the range of the closest dated object at Index = 6, 12, 13, 17, 18, 19, 20, 21, 38, 39, 40, 43, 44, 57, 67, 68, 69, 70, 72, 75, 98, 101, 102, 106, 107, 112, 113, 114, 120, 122, 123, 129, 136, 137, 138, 142, 143, 146, 148, 149, 150, 168, 170, 172, 173, 175, 177, 178, 179, 180, 181, 182, 186, 187, 189, 190, 195, 203, 204, 205, 206, 207, 208, 209, 210, 212, 214, 215, 216, 217, 218, 234, 238, 240, 241, 242, 245, 261, 262, 292, 293, 296, 297, 298, 299, 300, 301, 302, 303, 304, 305, 306, 307, 308, 309, 310, 311, 312, 313, 314, 315, 316, 317, 318, 319, 320, 543, 546, 547, 548, 549, 581, 582, 583, 584, 585, 586, 588, 590, 591, 592, 593, 594, 595, 596, 597, 599, 602, 606, 672, 673, 674, 675, 676, 677, 678, 679, 680, 681, 682, 683, 684, 685, 788, 789, 790, 791, 792, 793, 794, 795, 796, 797, 798, 799, 800, 801, 802, 803, 804, 805, 806, 807, 808, 827, 828, 830, 831, 832, 833, 834, 835, 836, 837, 838, 839, 840, 841, 842, 843, 844, 845, 846, 847, 848, 849, 850, 866, 867, 870, 871, 872, 873, 874, 875, 878, 879, 880, 890, 902, 903, 904, 905, 906, 907, 908, 909, 910, 911, 912, 913, 914, 915, 916, 917, 918, 919, 920, 921, 922, 923, 924, 929, 930, 931, 932, 933, 934, 935, 936, 937, 938, 939, 940, 941, 942, 943, 944, 945, 946, 947, 948, 949, 957, 958, 961, 962, 963, 964, 965, 966, 967, 968, 969, 970, 989, 990, 995, 996, 997, 998, 999, 1000, 1001, 1002, 1003, 1004, 1005, 1006, 1007, 1008, 1009, 1010, 1011, 1012, 1013, 1014, 1015, 1016, 1029, 1030, 1031, 1035, 1036, 1051, 1053, 1054, 1055, 1062, 1064, 1065, 1066, 1067, 1093, 1094, 1095, 1096, 1097, 1098, 1099, 1122, 1124, 1125, 1126, 1127, 1129, 1130, 1133, 1225, 1267, 1268, 1269, 1270, 1271, 1272, 1273, 1274, 1275, 1276, 1277, 1278, 1290, 1297, 1302, 1306, 1307, 1308, 1310, 1311, 1315, 1322, 1323, 1324). This is not recommended. For information see documentation of get.step.sequence().

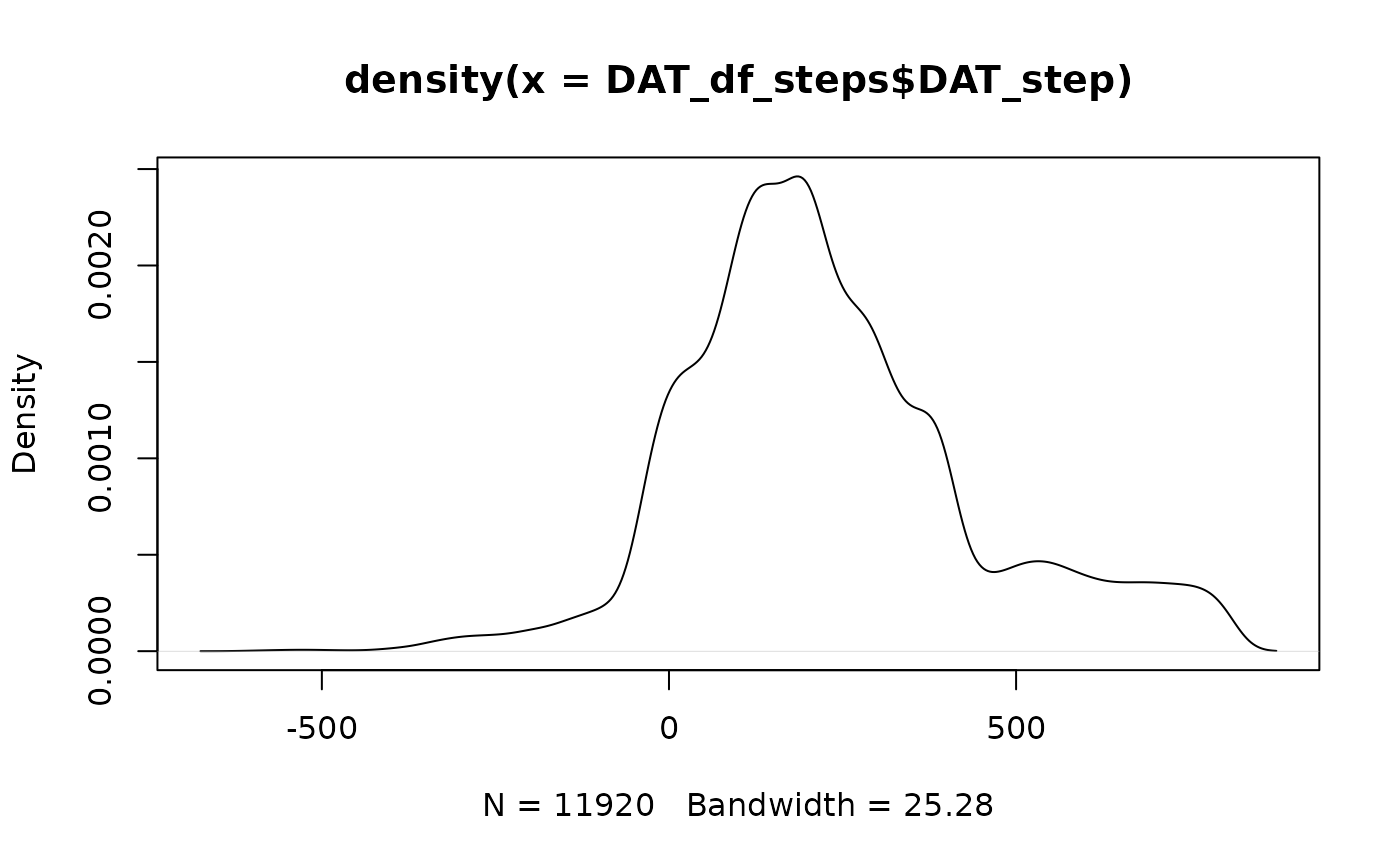

plot(density(DAT_df_steps$DAT_step))